In July, I ran a card sort study for the section of the website I was helping to redesign. The new portal I’ve been working on doesn’t have categories that are as clear-cut, so we decided to do another card sort.

Reflections

A small number of sessions worked fine. The first time we did the study, we ran 5 group sessions and found that we began seeing the same results, especially after refining it the first time. This time we ran only 4 group sessions, and we still found nothing new after the 3rd session (though that may have had something to do with the make-up of the 4th group).

Timing was an issue. It was somewhat of an issue the first time too (because it was summer), but this time was almost worse because I had less time between advertising and carrying out the study. And although there were a lot more people on campus, the study was carried out around midterms, so it was even more difficult to schedule people into the same time slots.

Advertising online worked 100x better than posting paper posters around campus, whether it was e-mailing certain mailing lists, posting on the psychology department’s list of surveys, or e-mailing previously interested people whose schedules just didn’t work with ours for the first study.

Getting people into the right frame of mind was again an issue. I won’t go into this too much, though it was interesting that students had fewer problems with this than people who worked on campus. I won’t even begin to theorize why, particularly since that was a trend over only 9 groups of participants.

Participants can be a great source of ideas. As we were doing another closed card sort, we had pre-set categories, but one of the participants in the first group came up with a much better scheme by adding a couple of categories and removing one, which made the categorization less ambiguous.

Analysis

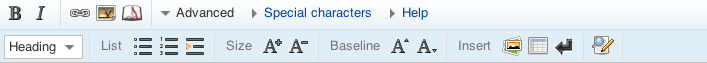

Since I didn’t write about this last time, I thought I’d write a little about analysis this time (I used the same method). After gathering the results (simply by writing down the numbers of the sticky notes), I entered them into xSort, a free card sort statistical program for the Mac. The program also lets participants enter data in their own sessions, but it is designed for individuals rather than groups, so I opted to enter the results myself and use it primarily for analysis.

Statistical Analysis

The program provided the standard distance table and cluster tree results. The cluster tree options included single, average, and complete linkage. From what I have read of the literature, average linkage trees seem to be the most common. I did find that single linkage gave many more branches (and generally more groups too), whereas complete linkage gave few groups but also many more outliers when using a cutoff in the standard range of 0.4 to 0.6. Average linkage gives a good balance between the two, but of course I did not simply take the cluster tree and turn it into a new IA.
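For anyone curious about what goes on behind a distance table and cluster tree, here is a minimal Python sketch. It is not xSort's actual implementation. It assumes the distance between two cards is the fraction of sessions in which they were placed in different categories, and it stops merging clusters once the linkage distance passes the cutoff. The card names, session data, and the function names `distance_table` and `cluster` are all made up for illustration.

```python
from itertools import combinations

# Hypothetical cards and group sessions (illustration only, not study data).
CARDS = ["Library hours", "Course catalog", "Exam schedule",
         "Parking permits", "Campus map"]
SESSIONS = [  # one dict per group session: card -> category chosen
    {"Library hours": "Academics", "Course catalog": "Academics",
     "Exam schedule": "Dates", "Parking permits": "Dates", "Campus map": "Other"},
    {"Library hours": "Academics", "Course catalog": "Academics",
     "Exam schedule": "Dates", "Parking permits": "Dates", "Campus map": "Dates"},
    {"Library hours": "Academics", "Course catalog": "Academics",
     "Exam schedule": "Academics", "Parking permits": "Dates", "Campus map": "Other"},
    {"Library hours": "Academics", "Course catalog": "Academics",
     "Exam schedule": "Dates", "Parking permits": "Dates", "Campus map": "Academics"},
]

def distance_table(sessions, cards):
    """Assumed distance: share of sessions that split a pair of cards."""
    table = {}
    for a, b in combinations(cards, 2):
        together = sum(1 for s in sessions if s[a] == s[b])
        table[frozenset((a, b))] = 1 - together / len(sessions)
    return table

def cluster(cards, table, linkage="average", cutoff=0.5):
    """Agglomerative clustering, stopping once the closest pair exceeds cutoff."""
    combine = {
        "single": min,                       # closest pair of members
        "complete": max,                     # farthest pair of members
        "average": lambda d: sum(d) / len(d),  # mean over all member pairs
    }[linkage]

    def dist(x, y):
        return combine([table[frozenset((a, b))] for a in x for b in y])

    clusters = [frozenset([c]) for c in cards]
    while len(clusters) > 1:
        x, y = min(combinations(clusters, 2), key=lambda pair: dist(*pair))
        if dist(x, y) > cutoff:
            break  # nothing left that is close enough to merge
        clusters = [c for c in clusters if c not in (x, y)] + [x | y]
    return clusters

if __name__ == "__main__":
    table = distance_table(SESSIONS, CARDS)
    for method in ("single", "average", "complete"):
        groups = cluster(CARDS, table, linkage=method, cutoff=0.5)
        print(method, [sorted(g) for g in groups])
```

With this toy data and a cutoff of 0.5, all three linkages leave "Campus map" as a group of its own, which is the kind of outlier that is worth checking against session notes. Raising the cutoff to 0.8 shows the difference between methods: single linkage chains everything into one group, while average and complete linkage stop at two.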

Subjective Analysis

During the study, I also took a lot of notes on labels that participants found problematic, along with their suggestions. I also noted items that participants found difficult to put into a single category; these were generally reflected in the cluster tree as well, tending to be the outliers or the items that were not categorized.

Using the Results

I used the average linkage cluster tree as the basis for an IA. Many of the problematic labels identified in participants’ comments were renamed to better reflect the content that a link would point to, which also helped in putting them into the right category. One link never ended up in a category, so we decided to work it into the design outside of the categories we had created. This version of the IA was then put forward as a draft, which will hopefully see little change before the “final” version is made for the portal.